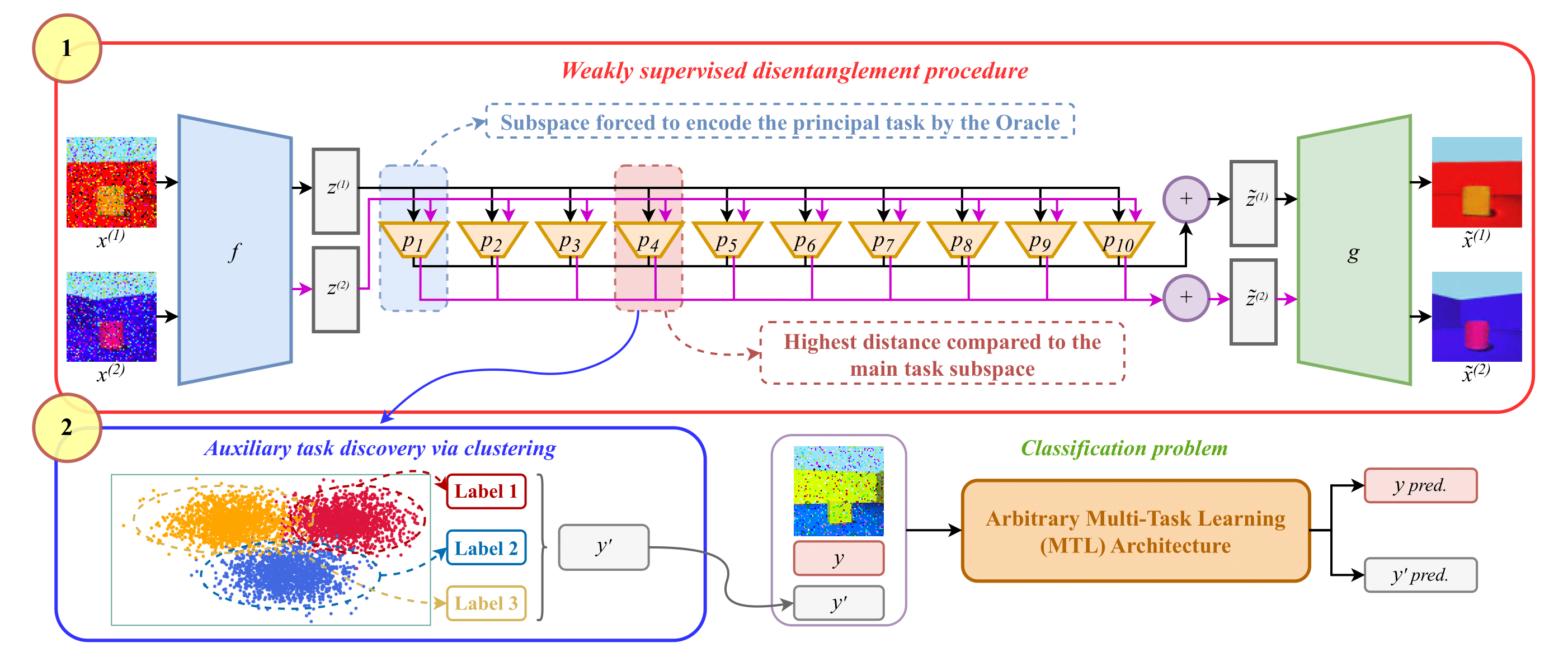

Auxiliary tasks facilitate learning when data is scarce or the principal task is extremely complex. The idea is inspired by the improved generalization that comes from solving multiple tasks simultaneously, which leads to a more robust shared representation. Nevertheless, finding optimal auxiliary tasks is a crucial problem that often requires hand-crafted solutions or expensive meta-learning approaches. In this paper, we propose a novel framework, dubbed Detaux, in which a weakly supervised disentanglement procedure is used to discover a new, unrelated auxiliary classification task, allowing us to go from a Single-Task Learning (STL) to a Multi-Task Learning (MTL) problem. The disentanglement procedure works at the representation level, isolating the variation related to the principal task in a dedicated subspace and additionally producing an arbitrary number of orthogonal subspaces, each of which encourages high separability among projections. We generate the auxiliary classification task through a clustering procedure on the most disentangled subspace, obtaining a discrete set of labels. Subsequently, the original data, the labels associated with the principal task, and the newly discovered ones can be fed into any MTL framework. Experimental validation on both synthetic and real data, along with various ablation studies, demonstrates promising results, revealing the potential of what has been, so far, an unexplored connection between learning disentangled representations and MTL.
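The label-generation step described above (cluster the projections in one subspace, then pair the resulting cluster ids with the principal labels) can be illustrated with a minimal sketch. It rests on several assumptions that are not taken from the paper: the projections onto the chosen subspace are taken as already computed, plain k-means stands in for the unspecified clustering procedure, and the names `kmeans_labels` and `build_mtl_targets` are hypothetical.

```python
import numpy as np


def kmeans_labels(z, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means; returns one integer cluster id per row of z.

    Assumption: k-means is only a stand-in for the clustering procedure,
    which the abstract does not specify.
    """
    rng = np.random.default_rng(seed)
    # Initialise centroids from k distinct data points.
    centroids = z[rng.choice(len(z), size=k, replace=False)]
    for _ in range(n_iter):
        # Squared Euclidean distance from every point to every centroid.
        dists = ((z[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        # Recompute centroids; keep the old one if a cluster went empty.
        new_centroids = np.array([
            z[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels


def build_mtl_targets(principal_labels, subspace_projections, n_aux_classes):
    """Pair each principal label with an auxiliary label found by clustering."""
    aux_labels = kmeans_labels(subspace_projections, n_aux_classes)
    # Column 0: principal task label, column 1: discovered auxiliary label.
    return np.stack([principal_labels, aux_labels], axis=1)


# Toy data: two well-separated blobs play the role of the projections
# onto the selected subspace (hypothetical values, for illustration only).
rng = np.random.default_rng(0)
blob_a = rng.normal(loc=-5.0, scale=0.5, size=(50, 2))
blob_b = rng.normal(loc=5.0, scale=0.5, size=(50, 2))
projections = np.concatenate([blob_a, blob_b])
principal = rng.integers(0, 3, size=100)

targets = build_mtl_targets(principal, projections, n_aux_classes=2)
```

Each row of `targets` then holds a (principal, auxiliary) label pair that a two-head multi-task model could be trained on alongside the original inputs. How the subspace is selected and how many clusters are used are left open here.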

@Article{skenderi2023disentangled,
  title = {{Disentangled Latent Spaces Facilitate Data-Driven Auxiliary Learning}},
  author = {Skenderi, Geri and Capogrosso, Luigi and Toaiari, Andrea and Denitto, Matteo and Fummi, Franco and Melzi, Simone and Cristani, Marco},
  journal = {arXiv preprint arXiv:2310.09278},
  year = {2023}
}